The Hidden Privacy Risks in AI-Enabled Financial Advisory Tools & How You Can Improve Trust in Your Service

AI is reshaping financial services at every level. Specialized AI tools for financial planning (Datarails, Cube), market intelligence (AlphaSense, Kensho), portfolio management (Nitrogen, SigFig), and operations and compliance (AdvisorEngine, Quantivate) are changing how financial advisors and everyday people interact with their money. At the same time, general-purpose AI platforms like ChatGPT and Gemini are helping teams with faster content generation, research assistance, and brainstorming.

The efficiency gains, deeper insights, and new pathways to customer engagement are undeniably significant, yet beneath the promise lies a growing privacy and security crisis. Consumers are oversharing personal data with AI systems, often without realizing it, and many of those systems may not be adequately secured. Financial institutions are under increasing regulatory scrutiny to protect sensitive information and demonstrate compliance. Meanwhile, criminals are exploiting deepfakes, voice cloning, and generative AI to commit fraud at an unprecedented scale.

Here we explore the hidden data privacy risks of AI in financial advice, real-world examples of AI-enabled fraud, and strategies financial institutions can adopt to safeguard consumer trust and improve transparency.

The Double-Edged Nature of AI Advice

AI-powered financial tools offer enormous benefits. They provide instant insights, simplify retirement planning, offer personalized investment guidance, and even automate routine financial tasks. For consumers, these platforms can feel like having a personal advisor available 24/7. For institutions, they promise operational efficiency, better decision-making, and cost savings.

Yet, the very convenience that makes AI attractive also creates significant risks. Unlike licensed financial advisors, AI systems are not bound by fiduciary duty or strict privacy laws. They may process sensitive personal and financial information without the same regulatory safeguards that govern human advisors.

The implications are serious. Users often assume that AI interactions are private, but conversations may be stored, logged, or analyzed to improve models. If accounts are breached, shared information can be exposed to unauthorized parties, providing cybercriminals with the raw material needed for identity theft, account takeovers, or fraud.

Even seemingly innocuous prompts can inadvertently reveal personal patterns, risk tolerance, or investment strategies. Financial institutions face a dual challenge: they must balance the adoption of AI tools to remain competitive, while ensuring that consumers’ sensitive information remains secure. Without clear governance, user education, and robust privacy safeguards, AI can shift from a powerful ally to a significant liability.

Research underscores the magnitude of exposure:

- Over 4% of AI prompts submitted by users contain sensitive information such as account numbers or Social Security numbers.

- Over 20% of uploaded files to AI platforms include confidential or personal data.

- AI conversations may be stored, analyzed, or exposed if the system is breached, creating significant identity theft and fraud risk.
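One way to reduce the exposure described above is to scrub obvious identifiers from a prompt before it leaves the user's device or the institution's network. The following is a minimal sketch, not a production filter: the function name `redact_prompt`, the placeholder tokens, and the assumed shape of an account number (8 to 17 consecutive digits) are illustrative assumptions, and real detection would need broader, validated rules.

```python
import re

# Illustrative patterns only (assumptions, not a complete PII detector).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")   # U.S. SSN written as 123-45-6789
ACCOUNT_PATTERN = re.compile(r"\b\d{8,17}\b")        # assumed account-number shape


def redact_prompt(prompt: str) -> str:
    """Replace likely SSNs and account numbers with placeholder tokens."""
    redacted = SSN_PATTERN.sub("[REDACTED_SSN]", prompt)
    redacted = ACCOUNT_PATTERN.sub("[REDACTED_ACCOUNT]", redacted)
    return redacted


if __name__ == "__main__":
    sample = "My SSN is 123-45-6789 and my account is 000123456789. Can I retire at 60?"
    print(redact_prompt(sample))
```

Pattern matching of this kind catches only well-formatted identifiers, so it works best as one layer alongside user education and vendor-side controls.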

The Rise of AI-Driven Fraud

Generative AI supercharges fraudsters’ ability to exploit the sensitive information that consumers overshare. The tools once reserved for sophisticated state actors are now available to everyday criminals. With generative models able to produce convincing text, audio, and video, trust itself is becoming a liability in digital finance.

Case Study: A finance worker in Hong Kong was tricked by a deepfake video conference in which criminals impersonated the firm’s CFO, and the firm reported a $25 million loss. Every participant appeared real, but the entire meeting was fabricated. The incident highlights how even seasoned professionals can be deceived when fraud is packaged in a familiar, human-like format.

Beyond corporate scams, AI-powered voice cloning is fueling a wave of consumer-targeted fraud. With just a few seconds of recorded speech, attackers can impersonate a family member or banker, tricking victims into transferring funds or revealing account details. The social engineering risk is magnified when combined with data leaks, where criminals already possess personal identifiers like addresses and financial account numbers.

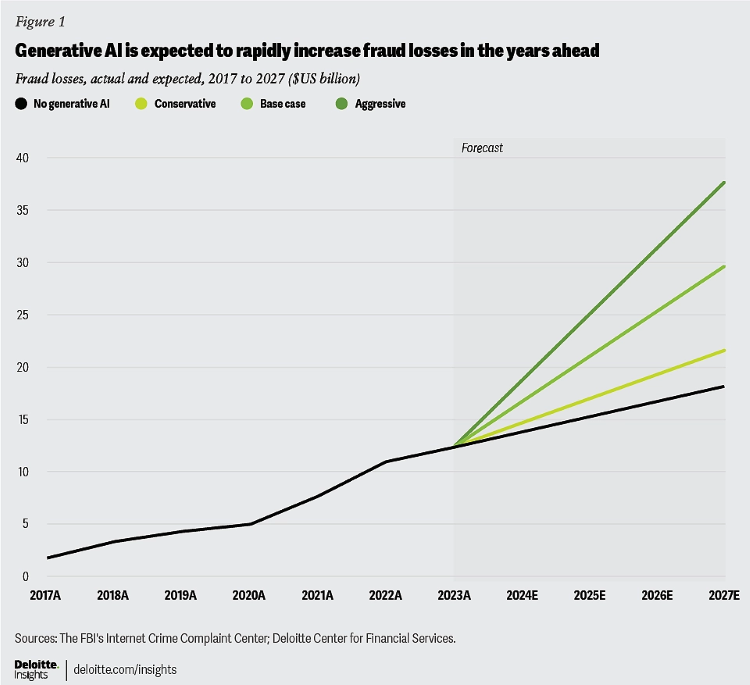

The financial impact is staggering. In 2024, U.S. consumers lost an estimated $16.6 billion to scams, a 33% increase from the year before. Deloitte predicts that generative AI will enable fraud losses to reach $40 billion annually in the United States by 2027, up from $12.3 billion in 2023.

Juniper Research forecasts that global financial institutions will face $58.3 billion in fraud costs by 2030, a sharp increase from $23 billion in 2025. This rise is primarily fueled by synthetic identity fraud and other AI-enhanced attack methods. According to a report by Sift, AI-driven scams contributed to an estimated $1 trillion in total global scam losses in 2024, and the trend shows no sign of slowing.
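To put these forecasts on a comparable footing, the start and end figures can be converted into an implied annual growth rate. The sketch below is simple arithmetic on the numbers quoted above. It assumes steady year-over-year compounding between the two endpoints, and the resulting rates are derived here for illustration, not taken from the cited reports. The function name `implied_annual_growth` is hypothetical.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # U.S. fraud losses: $12.3B (2023) to $40B (2027), a 4-year span.
    us_rate = implied_annual_growth(12.3, 40.0, 4)
    # Global institutional fraud costs: $23B (2025) to $58.3B (2030), a 5-year span.
    global_rate = implied_annual_growth(23.0, 58.3, 5)
    print(f"Implied U.S. growth: {us_rate:.1%} per year")
    print(f"Implied global growth: {global_rate:.1%} per year")
```

Under that compounding assumption, the U.S. projection works out to roughly 34% growth per year and the global forecast to roughly 20% per year.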

For banks and investment firms, this means not only heightened fraud risk but also reputational damage and regulatory scrutiny.

Why AI Fraud is Hard to Detect

- Deepfakes can bypass traditional identity verification systems.

- Voice cloning requires only a few seconds of audio.

- Fraud detection models are struggling to keep pace with generative AI.

- Even experienced professionals are susceptible to AI-powered deception.

Privacy-Centered Defenses

Financial institutions have a responsibility to protect consumer data and ensure that AI adoption enhances trust rather than eroding it. This requires rethinking both technical safeguards and organizational commitments to privacy by design.

Institutional Responsibilities

- Federated Learning for Data Protection: Instead of centralizing massive consumer datasets, federated learning allows institutions to train fraud-detection models on-device or across distributed systems. This approach reduces the risk of catastrophic breaches while still benefiting from AI-driven insights.

- Explainable AI for Accountability: Opaque models undermine consumer trust when fraud alerts or account decisions cannot be explained. By adopting explainable AI, institutions make risk scoring and fraud detection processes transparent, giving consumers confidence that decisions are fair and defensible.

- Authentication Beyond Biometrics: Deepfakes have shown the vulnerabilities of voice and facial recognition. Institutions must employ multi-layered authentication strategies—combining device intelligence, behavioral biometrics, and tokenized factors—to ensure resilience against synthetic identity fraud.

- Minimization by Design: Privacy-centric defenses require limiting the collection and retention of consumer data. By embracing data minimization, pseudonymization, and encryption at every stage of processing, institutions can reduce both compliance exposure and consumer harm in the event of compromise.
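The minimization and pseudonymization practices in the last item can be illustrated with a short sketch. It assumes a hypothetical transaction record and a hypothetical allow-list of fields (`ALLOWED_FIELDS`). It uses a keyed hash (HMAC-SHA256 from the Python standard library) as one common way to pseudonymize an identifier, which is not necessarily the method any particular institution uses. Key management is out of scope here.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields the downstream model actually needs.
ALLOWED_FIELDS = {"customer_id", "transaction_amount", "merchant_category"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Return a keyed hash so the raw identifier never leaves the source system."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Drop fields not on the allow-list and pseudonymize the customer identifier."""
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "customer_id" in minimized:
        minimized["customer_id"] = pseudonymize(str(minimized["customer_id"]), secret_key)
    return minimized


if __name__ == "__main__":
    key = b"example-key-for-illustration-only"  # assumption: a real key comes from a secrets manager
    raw = {
        "customer_id": "C-1001",
        "ssn": "123-45-6789",
        "home_address": "1 Main St",
        "transaction_amount": 250.0,
        "merchant_category": "grocery",
    }
    print(minimize_record(raw, key))
```

Because the same key always maps the same identifier to the same token, records can still be linked for fraud analysis without exposing the underlying customer ID.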

Regulatory Alignment

Financial institutions must get ahead of evolving regulatory standards:

- Transparency in AI Usage: Clearly disclose when and how AI is used in financial decision-making and fraud detection.

- Deepfake Safeguards: Develop and adopt systems capable of detecting and labeling synthetic media before it can be used for fraudulent activity.

- Global Standards Convergence: As GDPR, the EU AI Act, and U.S. state privacy laws (California, Colorado, New Jersey, Delaware) tighten requirements, forward-looking institutions should prepare for harmonization rather than piecemeal compliance.

- Auditable Privacy Practices: Establish internal frameworks for documenting, auditing, and reporting AI use cases, ensuring that privacy risks are proactively mitigated.

Privacy-Centric AI in Banking

- Federated learning reduced the volume of customer data shared between systems by 80% in banking pilots.

- Explainable AI adoption correlates with a 20% increase in consumer trust scores in financial services.

- EU regulators now require human-interpretable risk explanations for high-risk AI systems under the AI Act.
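The federated learning idea referenced above can be sketched with a toy example. The code below implements a simplified form of federated averaging on a one-parameter linear model: each client trains on its own data and sends back only an updated weight, which the server combines in proportion to each client's sample count. All names (`local_update`, `federated_average`) and the data are hypothetical, and the sketch omits protections a real deployment would likely add, such as secure aggregation or differential privacy. It is not a description of how the banking pilots mentioned above were built.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (feature, label) pairs that stay on the client


def local_update(weight: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one client's private data."""
    for _ in range(epochs):
        # Gradient of mean squared error for the model y = weight * x.
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight: float, clients: List[Dataset]) -> float:
    """One round: clients train locally; the server averages weights by sample count."""
    total = sum(len(data) for data in clients)
    updates = [(local_update(global_weight, data), len(data)) for data in clients]
    return sum(w * n for w, n in updates) / total


if __name__ == "__main__":
    # Toy data generated from y = 2x, split across three hypothetical clients.
    clients = [
        [(1.0, 2.0), (2.0, 4.0)],
        [(3.0, 6.0)],
        [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
    ]
    weight = 0.0
    for _ in range(50):
        weight = federated_average(weight, clients)
    print(f"Learned weight: {weight:.3f}")
```

In this toy setup the shared weight converges toward 2.0, the slope used to generate the data, even though no client's raw records are ever sent to the server.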

Why the Financial Industry Needs Clarip

The financial industry is under pressure from three sides: accelerating AI fraud, growing regulatory scrutiny, and rising consumer expectations for privacy. Institutions cannot simply layer on more security; they need integrated governance.

Clarip provides that foundation. Our platform enables:

- Data Mapping & Monitoring: Identify where sensitive financial data lives across systems and vendors.

- Consent & Rights Management: Demonstrate accountability under evolving privacy laws.

- Integrated Fraud & Privacy Intelligence: Align fraud prevention with privacy compliance to reduce both regulatory and reputational risk.

By unifying data governance, privacy, and compliance into a single platform, Clarip helps financial institutions not only survive the AI fraud crisis but build a competitive advantage around trust.

Case Study: When a global retailer faced regulatory action for mishandling privacy opt-outs, Clarip’s compliance platform enabled rapid remediation. Within weeks, several corporations demonstrated compliance improvements, and some were later recognized as leaders in industry best practices. The same approach can equip financial institutions to respond quickly to emerging AI privacy threats.

The Future Is AI-Driven Advice and Enhanced Privacy!

The future of financial services will be powered by AI, but trust will be the currency that determines winners and losers. Institutions that fail to address AI-driven privacy and fraud threats risk losing consumer confidence and regulatory standing. Clarip enables financial organizations to turn compliance into a cost-saving, trust-building strategy. By embedding privacy at the heart of AI adoption, the industry can harness innovation without sacrificing security.

Clarip takes enterprise privacy governance to the next level and helps organizations reduce risks, engage better, and gain customers’ trust! Contact us at www.clarip.com or call Clarip at 1-888-252-5653 for a demo.

Email Now:

Mike Mango, VP of Sales

mmango@clarip.com

Related Articles:

Data Privacy and the Future of Digital Marketing

US Privacy Law Tracker

Understanding US Data Privacy Law Fines

Evolution of digital consent and preferences

What Is GPC (Global Privacy Control), And why does it matter?
